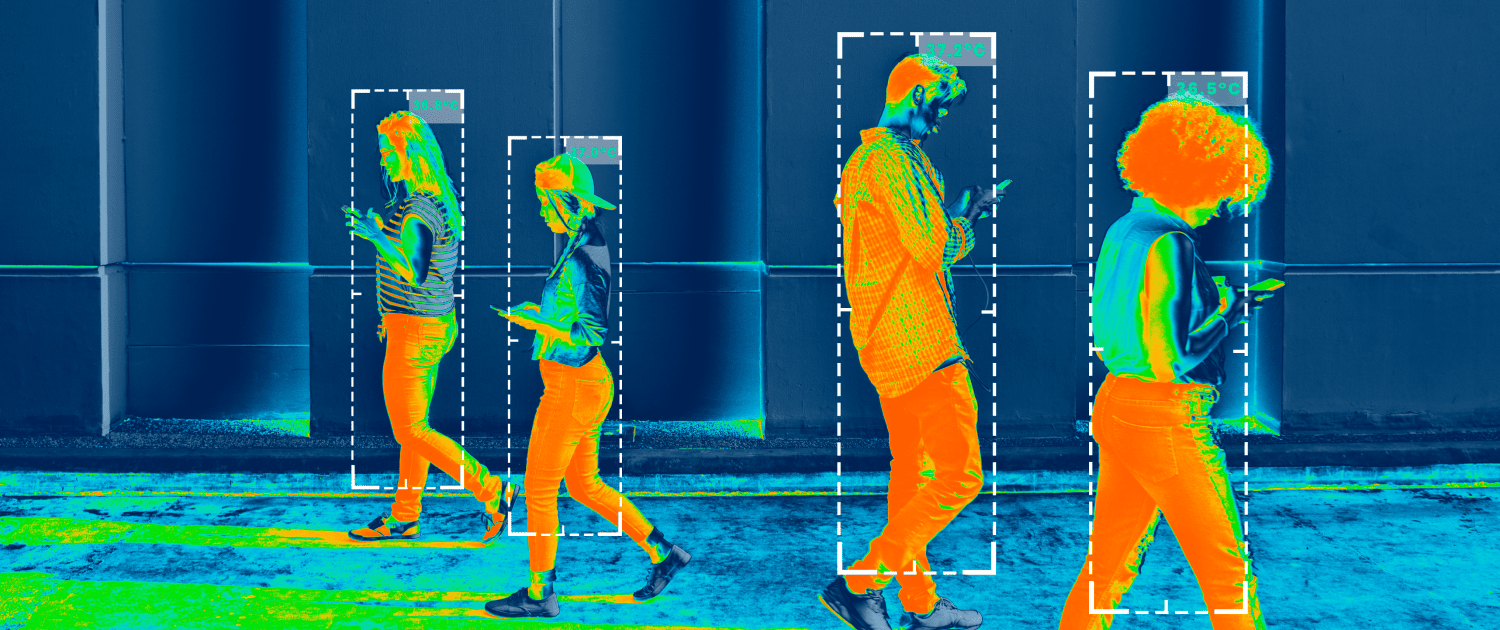

Computer vision software enables machines to interpret and analyze visual data, turning images and video into actionable insights. Its adoption is rapidly expanding across industries: from quality inspection in manufacturing to real-time surveillance and autonomous vehicles.

In this guide, you’ll learn what it takes to build a computer vision solution in 2025 – including system architecture, development timelines, tech stacks, hiring tips, cloud deployment strategies, and real-world costs.

Step-by-Step Computer Vision Software Development Process

Building a computer vision system is a multi-stage pipeline that requires precision at every step. Here’s how the process typically unfolds:

1. Problem Definition

Clearly defining the business problem and success criteria, whether it’s detecting defects on a production line or recognizing faces in security footage.

2. Data Collection

Gathering high-quality, relevant image or video datasets tailored to your use case. This may include public datasets, synthetic data, or custom image capture.

3. Data Annotation

Labeling the data to teach the model what to look for. This could involve bounding boxes, segmentation masks, or classification tags using tools like CVAT or Label Studio.
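
Annotation tools export bounding boxes in different coordinate conventions, so a conversion step is often needed before training. The sketch below is an illustration only: it assumes boxes arrive as pixel corners (x_min, y_min, x_max, y_max) and that the training code expects the normalized center format used by YOLO-style label files. The function names and the sample annotation are hypothetical and do not come from CVAT or Label Studio.

```python
def corners_to_normalized_center(box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a normalized
    (x_center, y_center, width, height) tuple with values in [0, 1]."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= image_width and 0 <= y_min < y_max <= image_height):
        raise ValueError(f"box {box} does not fit a {image_width}x{image_height} image")
    return (
        (x_min + x_max) / 2 / image_width,
        (y_min + y_max) / 2 / image_height,
        (x_max - x_min) / image_width,
        (y_max - y_min) / image_height,
    )


def normalized_center_to_corners(box, image_width, image_height):
    """Inverse conversion, back to pixel corner coordinates."""
    x_center, y_center, width, height = box
    return (
        (x_center - width / 2) * image_width,
        (y_center - height / 2) * image_height,
        (x_center + width / 2) * image_width,
        (y_center + height / 2) * image_height,
    )


# Hypothetical annotation: one defect drawn on a 400x500 pixel image.
annotation = {"label": "defect", "box": (100, 50, 300, 250)}
normalized = corners_to_normalized_center(annotation["box"], 400, 500)
print(annotation["label"], normalized)
```

Validating boxes at conversion time, as the sketch does, tends to surface annotation mistakes (inverted or out-of-frame boxes) before they reach training.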

4. Model Selection & Training

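Choosing an architecture suited to the task (for example, starting from a pre-trained model such as YOLO or ResNet) and training it on the annotated data. This step often includes hyperparameter tuning.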

5. Evaluation & Testing

Validating model performance on held-out data the model has never seen, so that overfitting is caught before the system reaches production.
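
One simple way to spot overfitting is to compute the same metric on the training set and on unseen data, then compare the two. The sketch below assumes a binary task (1 = defect, 0 = no defect) with made-up labels; `precision_recall_f1` and `generalization_gap` are hypothetical helper names used for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def generalization_gap(train_metrics, heldout_metrics, key="f1"):
    """Training score minus held-out score; a large positive gap hints at overfitting."""
    return train_metrics[key] - heldout_metrics[key]


# Made-up labels and predictions, for illustration only.
train = precision_recall_f1([1, 1, 0, 0, 1, 0], [1, 1, 0, 0, 1, 0])
heldout = precision_recall_f1([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0])
print(train, heldout, generalization_gap(train, heldout))
```

How large a gap is acceptable depends on the use case; the comparison is a prompt for investigation, not a pass/fail rule.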

6. Deployment

Exporting and integrating the model into a production environment. This could be in the cloud (AWS, GCP, Azure), on edge devices, or embedded systems. At this stage, MLOps becomes essential, ensuring smooth version control, continuous integration and deployment (CI/CD), performance monitoring, and scalability of your computer vision models in real-world applications.

7. Monitoring & Optimization

Tracking real-world performance, retraining with new data if necessary, and optimizing latency, throughput, or accuracy as usage scales.
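
Latency and throughput are easiest to optimize once they are measured consistently. The following sketch times an arbitrary inference callable and reports mean, median, and 95th-percentile latency plus throughput. It is a minimal illustration: `fake_infer` is a stand-in for a real model call, and the warm-up count and nearest-rank percentile method are assumptions.

```python
import statistics
import time


def summarize_latencies(latencies_ms, elapsed_s):
    """Summarize per-request latencies (milliseconds) and overall throughput."""
    if not latencies_ms or elapsed_s <= 0:
        raise ValueError("need at least one latency and a positive elapsed time")
    ordered = sorted(latencies_ms)
    # Nearest-rank 95th percentile, using integer math to avoid float rounding.
    p95_rank = (95 * len(ordered) + 99) // 100
    return {
        "count": len(ordered),
        "mean_ms": statistics.fmean(ordered),
        "p50_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_rank - 1],
        "throughput_per_s": len(ordered) / elapsed_s,
    }


def benchmark(infer, inputs, warmup=2):
    """Time `infer` on each input after a few untimed warm-up calls."""
    for item in inputs[:warmup]:
        infer(item)
    latencies_ms = []
    started = time.perf_counter()
    for item in inputs:
        t0 = time.perf_counter()
        infer(item)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    return summarize_latencies(latencies_ms, time.perf_counter() - started)


def fake_infer(frame):
    """Stand-in for a real model call; it only sums the pixel values."""
    return sum(frame)


frames = [[i % 256] * 1000 for i in range(50)]
print(benchmark(fake_infer, frames))
```

Tail latency (p95) is reported alongside the mean because real-time applications are usually judged by their slowest responses, not their average ones.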

Detailed Cost Components of Computer Vision Software

Creating and deploying custom computer vision software involves a range of interconnected cost drivers that span across development, data, infrastructure, and long-term maintenance. These costs can vary significantly depending on the complexity of the project, the level of accuracy required, and whether the solution is built from scratch or leverages existing tools and models.

Development costs

At the core are development costs, which cover both the code for the computer vision models and the design of the broader system (interfaces, APIs, data pipelines, and real-time integration). Using pre-trained models like YOLO or ResNet may reduce time and expense, but custom models tailored to specific tasks often require additional engineering and experimentation, increasing overall costs. Furthermore, decisions about where the system runs (in the cloud, on-premises, or at the edge) have major financial implications related to latency, scalability, and hardware needs.

Data

Another significant cost category is data, which includes the collection, cleaning, and annotation of visual datasets. High-quality labeled data is essential for training effective models, and while open datasets can help, most applications demand custom datasets gathered in specific environments. Annotation, particularly for complex tasks like segmentation or object tracking, can be time-consuming and expensive. Synthetic data generation using 3D environments or simulations is an alternative, though it requires specialized tools and expertise.

Infrastructure and computing resources

Training vision models at scale demands powerful GPUs or TPUs, often rented from cloud providers such as AWS, Google Cloud, or Azure. Cloud platforms offer flexible, on-demand access to high-performance computing without the upfront capital expenditure of building physical infrastructure. However, these services can become costly over time, especially during prolonged model training, frequent batch inference, or continuous video stream processing.
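
A back-of-the-envelope model can show which of these usage patterns drives the bill. The sketch below is only an illustration: every number in it is a placeholder rather than a real provider price, and the `CloudUsage` fields are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class CloudUsage:
    gpu_hourly_rate_usd: float       # price of one GPU instance per hour
    training_hours_per_run: float
    training_runs_per_month: int
    inference_instances: int         # GPU instances kept running for inference
    inference_hours_per_month: float
    storage_tb: float
    storage_usd_per_tb_month: float


def monthly_cloud_cost(usage):
    """Split an estimated monthly bill into training, inference, and storage."""
    training = (usage.gpu_hourly_rate_usd
                * usage.training_hours_per_run
                * usage.training_runs_per_month)
    inference = (usage.gpu_hourly_rate_usd
                 * usage.inference_instances
                 * usage.inference_hours_per_month)
    storage = usage.storage_tb * usage.storage_usd_per_tb_month
    return {
        "training": training,
        "inference": inference,
        "storage": storage,
        "total": training + inference + storage,
    }


# Placeholder inputs, not real provider prices.
example = CloudUsage(
    gpu_hourly_rate_usd=2.0,
    training_hours_per_run=12,
    training_runs_per_month=4,
    inference_instances=1,
    inference_hours_per_month=720,
    storage_tb=5,
    storage_usd_per_tb_month=25.0,
)
print(monthly_cloud_cost(example))
```

With these placeholder inputs, the always-on inference instance is the largest line item, which is the pattern to watch for with continuous video stream processing.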

Deploying computer vision in the cloud also involves provisioning storage for large datasets, setting up scalable inference pipelines, and ensuring low-latency responses for real-time applications. Organizations often adopt containerization (e.g., Docker) and orchestration tools (e.g., Kubernetes) to manage deployments efficiently, while edge-cloud hybrid architectures are used when some processing must happen locally. As the system matures, operational tools like MLOps platforms are crucial for automating version control, A/B testing of models, monitoring for anomalies, and triggering retraining workflows.

Over time, organizations must also plan for model maintenance, adapting to data drift, addressing performance degradation, and updating systems to meet evolving business goals. The dynamic nature of production environments means that cloud-based systems must be built for resilience, scalability, and compliance with data privacy standards, each adding layers of complexity and cost.
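
Data drift can be monitored with a simple distribution comparison. The sketch below uses the Population Stability Index (PSI) on one summary feature per frame. Treat it as an assumption-laden illustration: mean image brightness as the monitored feature, the bin edges, the smoothing `epsilon`, and any alert threshold are all choices to tune for a given system.

```python
import math


def histogram_proportions(values, bin_edges, epsilon=1e-6):
    """Share of values per bin; empty bins are floored at epsilon so the
    logarithm below stays defined. Values outside the edges are ignored."""
    counts = [0] * (len(bin_edges) - 1)
    for value in values:
        for i in range(len(counts)):
            is_last_bin = i == len(counts) - 1
            in_bin = bin_edges[i] <= value < bin_edges[i + 1]
            if in_bin or (is_last_bin and value == bin_edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [max(count / total, epsilon) for count in counts]


def population_stability_index(reference, current, bin_edges):
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    ref = histogram_proportions(reference, bin_edges)
    cur = histogram_proportions(current, bin_edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Made-up mean-brightness values in [0, 1], for illustration only.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training_brightness = [0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
production_brightness = [0.05, 0.1, 0.15, 0.2, 0.3, 0.4]  # darker scenes
print(population_stability_index(training_brightness, production_brightness, edges))
```

A PSI of zero means the two histograms match; a score that keeps rising over successive production windows is a reasonable trigger for reviewing the data and, if needed, retraining.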

Long-term maintenance

Lastly, there are ongoing expenses tied to software licenses, SaaS tools, support, and personnel. From open-source frameworks to enterprise-level annotation platforms and cloud APIs, licensing and subscription fees can quickly accumulate. Skilled professionals (ML engineers, software developers, data annotators) are essential for both the initial launch and the system’s evolution, making staffing a major and recurring investment.

Computer Vision System Architecture: Hardware & Software Stack

Behind every successful computer vision system is a combination of hardware components and software tools.

Hardware Essentials for Computer Vision

The most common hardware used includes:

- Graphics Processing Units (GPUs) (essential for parallel processing of images and accelerating neural network training)

- Edge Devices (cameras, mobile phones, robots, or embedded systems that process data in real time, close to where it is captured)

- High-performance CPUs & RAM. Image preprocessing, data augmentation, and some inference tasks still rely on strong CPUs and sufficient memory (especially during batch operations).

- Camera & Sensor Systems

Computer Vision Software

Software is what powers critical capabilities like facial recognition, object detection, and image classification. Without it, hardware components such as cameras and chips can’t function effectively.

Here’s a look at the core layers of the computer vision software stack:

- Programming Languages (Python, C++)

- Frameworks & Libraries (OpenCV, PyTorch / TensorFlow, Detectron2 / MMDetection, MediaPipe, Albumentations, ONNX Runtime)

- Model Training & Deployment Tools (Weights & Biases, MLflow, NVIDIA TensorRT / OpenVINO, Docker & Kubernetes)

- Cloud & Storage Services:

  - For large-scale data storage and remote model training: AWS S3, GCP Cloud Storage, Azure Blob Storage

  - For model training and deployment:

    - AWS EC2 (with GPU), Google Colab Pro, Azure ML Studio

    - GCP Vertex AI (for managed model training, deployment, and MLOps)

    - (Optional: AWS SageMaker for end-to-end machine learning, including computer vision)

  - For model serving and scalable deployment: Docker & Kubernetes

If you want to understand how computer vision works, or are eager to explore how different sectors use it today, check out our earlier article: How Does Computer Vision Work? 6 Real-World Use Cases by Industry.

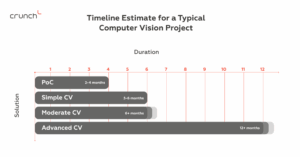

How Long Does Computer Vision Software Development Take?

One of the most common questions we hear from companies exploring AI solutions is: “How long will it take to build this?” But there’s no universal answer. Development time depends heavily on your project’s complexity, data availability, deployment method, and team composition.

Here’s a rough timeline estimate for a typical CV project:

- PoC: 8-16 weeks

- Simple CV app production ready (like barcode scanning, OCR, basic classification): 3-6 months

- Moderate CV system production ready (like face detection and object detection): 6+ months

- Advanced CV solution production ready (custom models, real-time tracking, 3D vision): 12+ months

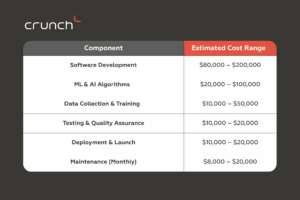

How Much Does It Cost to Build Computer Vision Software?

Many businesses hesitate to explore computer vision solutions, fearing prohibitive costs. Is computer vision expensive? With strategic planning and the right partnerships, implementing such technology can be more accessible than anticipated.

As an example, we will share a case of a French AI startup that collaborated with us to enhance their autonomous security robot with real-time face and person detection. The project successfully integrated advanced features such as gate detection and spatial awareness. The positive reception at the tech conference both validated the solution and attracted investor interest, demonstrating the project’s value and potential for scalability.

Estimated cost breakdown of a custom computer vision project:

It’s important to factor in cloud service expenses, which can vary significantly based on the amount of data processed, storage needs, and your choice of cloud provider.

Considering the project’s complexity and the integration of advanced features, the total cost of custom computer vision software development likely falls within the $100,000 to $350,000 range. However, if you’re starting with an MVP, costs can start from around $80,000.

For comparison, developing a feature-rich mobile application can cost between $100,000 and $200,000, and hiring a full-time experienced software developer in the U.S. averages around $120,000 annually.

Given these figures, investing in a tailored computer vision software solution can be a cost-effective strategy, especially when considering the potential for automation, enhanced security, and operational efficiency.

If you need custom computer vision software, contact our experts and they will estimate your project.

Hiring a Computer Vision Engineer: What Skills are Needed for Computer Vision?

When building a computer vision team, you need someone with a strong command of fundamental techniques, a sharp eye for model performance, and the ability to deploy solutions in real-world environments. Here’s a breakdown of the core competencies to prioritize when you decide to hire computer vision engineers:

Core Programming & Framework Proficiency

At the heart of any computer vision role lies robust programming capability. The candidate should be fluent in Python and C++, comfortable with OpenCV, and familiar with TensorFlow or PyTorch.

Deep Learning Expertise

As we already know, modern computer vision tasks are largely powered by deep learning. Look for candidates with:

- a strong grasp of Convolutional Neural Networks (CNNs) and their architectures

- hands-on experience with image classification, image segmentation, and object detection

- understanding of transformer-based models

Computer Vision Fundamentals

- Image processing techniques (filtering, edge detection, noise reduction)

- Data augmentation and preprocessing

- Feature extraction and matching (using techniques like SIFT or ORB)

- Exposure to optical flow, stereo vision, and 3D vision applications
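
To make one of these fundamentals concrete, here is a minimal sketch of Sobel edge detection written in plain Python on a nested list of grayscale values. It is for illustration only: production code would normally call an optimized library such as OpenCV, and leaving border pixels at zero is a simplifying assumption.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for each interior pixel of a 2D
    grayscale image given as a list of equal-length rows."""
    height = len(image)
    width = len(image[0]) if height else 0
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same length")
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            result[y][x] = math.hypot(gx, gy)
    return result


# Tiny synthetic image: dark on the left, bright on the right.
image = [[0, 0, 10, 10, 10] for _ in range(5)]
for row in sobel_magnitude(image):
    print(row)
```

The strongest responses line up with the column where the intensity jumps, which is the behavior an interviewer might ask a candidate to explain.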

Data & Model Management

An effective CV engineer knows how to evaluate model performance rigorously with appropriate metrics, has practical experience in dataset preparation, and is familiar with annotation tools like CVAT, Label Studio, or Roboflow.
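
For detection tasks, a metric worth probing in interviews is Intersection over Union (IoU), which scores how well a predicted box overlaps a labeled one. The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) corners; the example boxes are made up.

```python
def intersection_over_union(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    overlap_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    overlap_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = overlap_w * overlap_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Made-up ground-truth and predicted boxes.
ground_truth = (0, 0, 10, 10)
prediction = (5, 5, 15, 15)
print(intersection_over_union(ground_truth, prediction))
```

A prediction is typically counted as correct only when its IoU with a labeled box exceeds a chosen threshold, and that threshold is a project decision rather than a fixed constant.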

Model Deployment Skills

And of course, they also have to know how to deploy computer vision models. Some important skills include:

- the ability to export and optimize models with formats like ONNX or frameworks like TensorRT

- knowledge of edge computing for on-device inference

- experience integrating models into applications

- proficiency with cloud platforms (AWS, Azure) for building computer vision software that scales

Soft Skills

Finally, a brief word on soft skills. Think critically about what your team values most: is it communication? ownership? agility? Your workplace culture should guide your final decision.

Need help assembling your computer vision dream team? If you’re seeking an experienced partner, our team can support you with expert talent or end-to-end development. Book a free consultation call to hire computer vision experts.

Conclusion

Computer vision is an active driver of innovation across nearly every industry. But building a successful computer vision solution in 2025 demands the right mix of strategy, talent, infrastructure, and execution.

Whether you’re a startup founder planning your first MVP or an enterprise leader looking to automate visual tasks at scale, knowing what goes into these systems is key to setting realistic expectations and avoiding costly missteps.

With our proven experience and tailored approach in delivering custom computer vision development services, your business can harness the power of visual intelligence.

Talk to us today for a personalized project estimate.
